Authors:

Dan Bolnick (University of Connecticut)

Stacy Krueger-Hadfield (University of Alabama at Birmingham)

Alli Cramer (University of Washington Friday Harbor Laboratories)

James Pringle (University of New Hampshire)

While on the Isles of Shoals at the Training and Integration Workshop for the Evolution in Changing Seas Research Coordination Network, we were asked to serve on a panel about the “Challenges of integration - importance of language and frameworks in interdisciplinary collaborations”. The questions we received from the audience (mostly students and postdocs) revealed some general concerns about how to collaborate, and we thought a summary might benefit a broader audience, hence this post. Here, we begin by describing some of our interdisciplinary collaborations as examples. Then we provide a general how-to guide: how to find collaborators, how to set up agreements to manage expectations, and how to avoid common pitfalls.

What do we mean by ‘interdisciplinary collaboration’?

It is possible to have endless debates on the true nature of interdisciplinarity. Perhaps it is best to say collaboration with people who can provide skills or perspectives that lie outside your core competency. It is collaboration with folks not just for their individual insights alone, but for their broad background. As a means of introduction, the panel discussed their collaborative projects.

Dan: My PhD and postdoctoral training were very much focused on core topics in evolutionary ecology, touching on subjects like speciation, maintenance of genetic variation, selection arising from species interactions. I liked the idea of interdisciplinary collaboration, and I was able to observe some from a distance (my PhD mentor, Peter Wainwright, hired a postdoctoral researcher with a fluid dynamics engineering background to work on fish feeding). But I didn’t know where to begin: who to reach out to, and how to bridge fields. I had many collaborations, but most were with people in similar departments to my own. In the past decade I’ve tried to hire a more intellectually diverse set of postdocs, bringing together evolutionary biologists with geneticists, immunologists, and cell biologists, to try to generate some synergy. But recently I’ve established a few collaborations that have really stretched my boundaries. A couple years ago I received a Moore Foundation grant to collaborate with an engineer and an immunologist (the latter a former postdoc from my lab) on studying host-microbe interactions using microfluidic chip artificial guts. The visit to Dr. Rebecca Carrier’s lab in engineering was a genuine thrill, to see how engineers approached a problem. Then last year I received a grant with a computer scientist (Dr. Tina Eliassi-Rad) and statistician (Dr. Miaoyan Wang) to study the evolution of transcriptomic networks. These collaborations are fantastic because I really get to branch out and learn a little bit about entirely new fields, expanding my own horizons. And hopefully the projects will yield exciting insights that wouldn’t have been possible had I tried to tackle this on my own.

Stacy: I was trained as an evolutionary ecologist. To a certain extent, working at the interface of ecology and evolution necessitates some level of interdisciplinarity and collaboration. I’ve been smitten with algae since a phycology course as a senior at Cal State Northridge. My two PhD supervisors - Myriam Valero and Juan Correa - taught me a lot about collaboration and a more holistic approach to a rather fundamental question in biology - how and why did sex evolve. While I was part of several projects that were interdisciplinary in nature, I really began to explore ‘interdisciplinary’ collaboration and working with colleagues with distinctly different skill sets as an Assistant Professor at the University of Alabama at Birmingham. For example, I have had the opportunity to be part of an ANR-funded group called CLONIX-2D. While the threads of partial clonality connect everyone in CLONIX, we’re combining researchers with very different skill sets and focal taxa. This approach has the opportunity to yield breakthroughs, but presents challenges with language. What does one group mean by such and such? How do we define the jargon that is inherent to different taxonomic groups? Are we separated by a common language? Funnily enough, the CLONIX group is mostly French, with the exception of myself and Maria Orive as the North American delegation. So, we are, to a certain extent, separated by language before we even delve into biological vernacular. As an extension of CLONIX, the coordinator, Solenn Stoeckel, and I started talking about better methods and descriptive statistics for haploid-diploid taxa. Our musings occurred at a small conference - in another lifetime before COVID - and in thinking back as I help write parts of this post, such impromptu, serendipitous chats can lead to brilliant collaborations. Nevertheless, Solenn’s theoretical population genetic doodles were and are nothing if not daunting. 
I had to first conquer that feeling of intimidation and figure out a way to communicate where existing tools fell short for my day-to-day data analyses. Not only did we have to cross a barrier between French and English, but also one between scientific terms and concepts (red algal life cycles are not for the faint of heart). Bridging our linguistic gaps isn’t something that we figured out overnight and is still a work in progress. I think a good collaboration - whether in your specific field or in a totally different discipline - will continue to grow with time. Solenn and I have produced two papers (here and here), with a few more in the pipeline - all started from idle musings over coffee. Clear, precise language is worth its weight in gold - from the outset of a collaboration to the point where you begin to see your work come to fruition (i.e., a paper).

Alli: My Masters and PhD training were interdisciplinary as a matter of course. As a PhD student in an inland environmental science department, incorporating management and societal concerns was the norm and, moreover, I was the only marine ecologist in my department - in nearly the entire university. This interdisciplinary environment worked well with my research focus: ecoinformatics and integrative ecology. My specialty involves synthesizing disparate data sources to ask new questions, or test old questions in new ways. While I was a PhD student, my peers were limnologists, hydrologists, data scientists and engineers. Discussions with them about data sets and the ubiquity (or not) of lakes shrinking led to an interdisciplinary project synthesizing data from over 1.4 million lakes into a publicly available data set - the Global Lake, Climate, and Population data set. Other interdisciplinary projects I pursued were founded through the EcoDAS Symposium, a workshop aimed at connecting early career researchers in the aquatic sciences. Through this workshop I worked on an integrative ecology paper testing Grunbaum’s (2012) scaling predictions and a social-ecological frameworks paper discussing marine no-take zone management. My current research projects, for my postdoc and for the RCN, are both interdisciplinary - one at the intersection of community ecology, geology, and hydrology and the other connecting genetic and spatial aspects of population connectivity. In my experience, interdisciplinary collaborations are where new and exciting questions lie. To find them, seek out existing frameworks which connect researchers across disciplinary lines, such as EcoDAS or an RCN.

Jamie: In my case, I am trained as a physical oceanographer and have approached biologists to satisfy my curiosity about how species can persist at a place in the face of currents that sweep their offspring away. In turn, I have attracted the attention and collaboration of biologists and chemists who are curious how ocean circulation affects the things they study. Once you are known for broad interests and attain a reputation for putting effort into collaborations, it is easy to attract more collaborators. Before then, you will have to initiate collaborations and then follow through on what you have started. My best collaborations have been with other scientists who are genuinely interested in how the ocean affects their system, as opposed to those who want to figure out how to set up controls or systems which effectively eliminate the impacts of the ocean’s flow on the system they want to study. There is nothing wrong with the latter strategy – but it is less intellectually interesting for me.

Benefits of collaboration

Some of the themes that emerge above (and from our group discussion) are that collaboration outside of your own area of expertise brings quite a few benefits. From a strictly intellectual standpoint, merging distinct perspectives and skills provides synergistic insights that can lead to new ideas, or to conclusions you might otherwise miss. Or, collaboration may provide a new combination of technical know-how to acquire or analyze data in new ways. Financially, collaboration can enable access to a more diverse set of funding opportunities by making it possible to apply for research grants from multiple agencies (e.g., NSF and NOAA) or different divisions within NSF. Within NSF for instance there are programs like the Rules of Life that specifically require interdisciplinary collaborations (defined as having co-PIs who are normally funded by entirely different directorates within NSF). Lastly, setting aside the utilitarian issues, collaboration can be great fun. It is a chance to make interesting new friends and learn from each other. If the collaborators are from far-flung places, you then may get to convene group meetings at interesting places. Collaborations that begin with curiosity and continue to produce joy are much more likely to lead to interesting results than those that are put together for purely utilitarian reasons.

Some risks

Collaboration is not always good, whether interdisciplinary or not. Luckily all four of us have been blessed with many excellent collaborators; this is more a response to an audience question than a commentary on our own direct collaboration experiences. Collaborators may turn out to be uncooperative, unresponsive, or unpleasant. Many of these risks depend on your career stage. As a student, big collaborations may not be ideal, as this is the time to build your foundational area of expertise. Rushing to collaborate may stretch you too thin, when you need to be establishing your name as the go-to person on a particular subject. Moreover, large collaborative efforts often take time to produce products, and that timeline might not align with a student’s degree progression and milestones. Let’s say you start a collaboration that relies on another lab to generate some key data that you need to interpret your own results, or to build some piece of technology. If they don’t deliver quickly, you may be left waiting a long time for their product before you can proceed. That’s time that faculty can often spare, especially once tenured, but the wait can be awful for a time-constrained fifth year graduate student. Some of these concerns likely also apply to postdocs fresh out of their PhDs, who need papers and maybe can’t wait years for products. Similarly, for assistant professors, too much collaboration may be seen as not ‘independent enough’, posing problems for promotion and tenure review. As the P&T season begins anew, there are some interesting social media posts on tenure packets (e.g., Holly Bik’s thread). One piece of advice I (Stacy) received when putting together my dossier last year was to have a table where your and your lab members’ contributions to each paper are clearly outlined and described. A table like this makes such information abundantly clear, especially if you are not a first, last, and/or corresponding author. An added bonus when the table is completed is to see just how much you and your lab have accomplished in the last few years!

How to get started

The hardest part of starting a collaboration is finding committed collaborators. You can find potential collaborators at conferences: making a connection is certainly easier when we’re in the same venue and can chat at a poster session or over a meal. If you are proactively interested in starting a collaboration you might even consider going to a meeting outside your core discipline (e.g., Jamie, an oceanographer, coming to a meeting of biologists and geneticists). Other options include word of mouth, Google searches for key words, reading the literature in the area in which you wish to expand, or asking a colleague in that field. You might even use social media to put out a call for collaborators on a topic. Serendipity can play a role too: chance meetings outside of normal academic settings, or incidental connections between third parties. As a specific example, Dan’s collaboration with a computer scientist (Tina Eliassi-Rad) and statistician (Miaoyan Wang) began with a couple of these. A former student (Sam Scarpino) was new faculty at Northeastern University in the same Network Science Institute as Tina, and in a Zoom call to brainstorm an NSF Rules of Life proposal Sam suggested Tina would be a great addition to the team (and she is!). Then, looking for a statistician, Dan used Google Scholar and Google searches to figure out who was doing cutting-edge, well-cited work on statistical analyses of networks, and found Miaoyan’s name. Serendipitously, a former postdoc had just met Miaoyan (both being recent hires at Wisconsin) and recommended her. A couple of emails later we had some Zoom meetings arranged, and six months later we had the grant funded!

One key consideration is making sure your new collaborator(s) has the time and resources to hold up their part of the bargain. You are asking someone to step outside of their day-to-day role, and that takes effort and commitment, and may not be strategically in the best interest of their career. So don’t be offended if they don’t have time for you, but neither should you collaborate with someone who won’t be responsive, or if you will not have time to be responsive. (NOTE: we aren’t implying any of our collaborators are an issue in this regard; this is in response to a question from the panel audience.) A simple test is to be sure that this new collaborator puts some skin in the game. A good way to start is with a proposal of some sort: if they do not contribute both to their own part of the proposal and to the back-and-forth that improves the other parts, they are unlikely to be good collaborators in the long run. If writing a grant proposal together, require real text contributions and editorial effort. If that doesn’t materialize, heed the red flag and maybe find someone else. If a new and unknown collaborator isn’t putting time in now, at the grant writing stage, they probably aren’t really committed. This is a hard pill to swallow, but it is a far tougher lesson to learn down the road, when there are more entanglements and it becomes harder to get out unscathed. Do not hesitate to say no to requests for collaboration if you do not have the bandwidth to contribute fully or if the other person is likely not to contribute fully. And if someone isn’t contributing, move on to find a new collaborator. Better to “dump” someone on the first date, so to speak, than to commit and change your mind once you are funded. A caveat here is that grant writing is a great litmus test for faculty, but not always an option for graduate students.

You're in the same r(z)oom ... now what?

The contract: One strategy to ensure collaborations will be successful is to start, from the outset, with collaboration agreements outlining participants’ roles and expectations. Initial meetings of course will identify what each person is expected to do, but often these are done informally, leading to verbal agreements that can later be forgotten. Even if it seems overly formal, we cannot stress enough the value of written agreements. They keep everyone honest. In one multi-PI project Dan is involved in, which spans over a dozen PIs’ lab groups, each PI on the team contributes a Scope of Work document (a template can be found at the end of this post) that specifically outlines each person’s obligations - what data or tools or written products will be delivered, to whom, and when, with what funding. That way each person’s role is on paper, and if someone else tries to join in you can check their proposed scope of work against the many existing scope documents to make sure there’s no redundancy or conflict. It’s a great way to avoid the “I thought you were doing that” conversation later in the game. Note that each collaborator provides their own unique scope of work document detailing their particular contribution. This can be supplemented with a Collaborative Agreement, again a formal written text, that lays out more universal (rather than lab-specific) expectations. This is especially important for interdisciplinary collaborations, where you are bringing together people from different fields who therefore have different cultural traditions about things like authorship, ‘good journals’, etc. Things to put in a collaborative agreement may include:

Rules for who does/does not get authorship and how authorship order is determined.

A list of expected papers to be written, with lead authors designated in advance, if possible. Collaborations are fluid and may change over time, so regular updating of expected papers is a good idea.

Target journals - your interdisciplinary collaborators may have never heard of your favorite journal, and may not gain career benefits from publishing there (e.g., will a physicist’s tenure committee say “The American Naturalist, what is that, some kind of naturist newsletter?”)

Expectations for data storage as it is generated - is it shared with everyone on a server as the data are produced, or only when complete?

Data archiving

What are the procedures for conflict resolution (whether intellectual or interpersonal)? Who adjudicates disagreements about authorship, interpretation of results, or even harassment?

What if someone does not deliver on their task in a timely manner - at what point (and how) are they to be replaced by a new collaborator who will deliver?

Who can use what data, once it is generated?

Who is writing what grants, and when?

Code of conduct, field safety, lab safety

When a manuscript is almost ready, does everyone need to sign off to approve submission (yes, good idea), and what happens if someone takes months to do so, or refuses to?

Language barriers: In any collaboration, it is important to clearly and precisely explain terminology. Language is the means of communicating information, and lack of precision can lead to confusion. When you try to cross intellectual boundaries, whether they be across biomes or disciplines, language is critical. In a paper about the origin of the alternation of generations, David Haig elegantly described this challenge: “vocabulary … can be deceptively familiar: familiar because we use many of the same terms; deceptive because these terms are used with different connotations, arising from different conceptual and theoretical assumptions”. Striving for useful and precise definitions is essential not only for research, but also for successful collaborative projects. It is a feature, not a bug, of collaborative science. Often, the most novel and innovative aspects of a collaboration lie in the space where our assumptions differ.

As an example, the ‘Selection across the life cycle’ group from the 2019 Evolution in Changing Seas workshop encountered barriers to easy communication from the get-go. What did we even mean by a ‘complex life cycle’? A life cycle? A life history? Were life cycles and life histories merely synonyms? Were we tying ourselves up in knots over a semantic argument? We couldn’t make sufficient headway in our broader questions about selection across the life cycle if we didn’t all agree on what a life cycle was, let alone a complex one. We discussed what ultimately became Box 1 in Albecker et al. (2021) while on Shoals and in subsequent Zoom meetings. This certainly helped Stacy argue the point that life cycles and life history traits are not the same thing more broadly beyond the scope of our working group! And, these discussions were invigorating if not sometimes frustrating when you are limited by words that inadequately describe what you think makes sense in your own head.

Semantic confusion abounds. Even among evolutionary ecologists, which is how most participants at the recent workshop on Shoals identify, one word may mean many things. Semantic stress becomes even more acute when you cross into entirely new disciplines. Biologists speak an entirely different language from computer scientists - so how do you learn to communicate? For instance, Dan’s Rules of Life award has him collaborating with Tina Eliassi-Rad at Northeastern. Tina hasn’t had a biology class since high school, and Dan has never had a computer science class nor a class in network science. Starting the collaboration required Dan to learn a lot of basic network science terms, and in many cases biologists and computer scientists turned out to use different words for the same thing. Think about a gene expression database with individual animals as rows and different genes as columns. Dan might use terms like “individuals” or “replicates” for the rows, and “genes” or “dependent variables” for the columns, plus “independent variables” in the metadata, but a computer scientist might use “instances” and “features”. It has taken some practice getting comfortable with each other’s terminology. Meanwhile Tina asked for some basic introduction to genetics and RNA.
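One low-tech way to get comfortable with each other’s terminology is to write the shared vocabulary down next to a toy version of the data. The sketch below is purely illustrative: the animal names, gene names, and expression values are invented, and the glossary covers only the row and column terms mentioned above. It is one possible way to keep such a glossary, not a description of how Dan and Tina actually work.

```python
# Hypothetical toy gene expression table: one row per individual animal,
# one column per gene. All names and numbers are invented for illustration.
expression = {
    "fish_01": {"gene_A": 12.0, "gene_B": 3.5, "gene_C": 0.0},
    "fish_02": {"gene_A": 9.5, "gene_B": 4.0, "gene_C": 1.5},
}

# Shared glossary: part of the table -> (biologist's term, computer scientist's term)
GLOSSARY = {
    "rows": ("individuals / replicates", "instances"),
    "columns": ("genes / dependent variables", "features"),
}


def describe(table):
    """Describe the table's shape using both disciplines' vocabulary."""
    n_rows = len(table)
    n_cols = len(next(iter(table.values())))
    lines = []
    for part, count in (("rows", n_rows), ("columns", n_cols)):
        bio, cs = GLOSSARY[part]
        lines.append(f"{count} {part}: {bio} (biology) = {cs} (computer science)")
    return lines


for line in describe(expression):
    print(line)
```

A glossary like this can live alongside the collaboration agreement and grow as new terms cause confusion.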

Not only is common terminology essential for collaborative research, but so is a common framework. As another example from the Evolution in Changing Seas RCN, the ‘Connectivity’ working group Alli is a part of had to tackle different frameworks about how populations are connected. In this group, much of the language was the same, but even once terminology was reconciled, fundamental differences between how evolutionary, genetics-focused researchers saw populations and how spatial, ecology-focused researchers saw them required us to develop a new temporal framework to communicate effectively. By having a guiding structure for how our points of view connected with one another, we were able to make progress on our larger goal of using data to test connectivity assumptions. In this case the confusion arose in the closely related fields of ecology and evolution. In interdisciplinary collaborations, where intellectual frameworks differ almost by definition, taking the time to develop a clear theoretical outline for hypotheses helps develop research questions and facilitates communication among group members.

Conflicts sometimes arise in collaborations. Often, not surprisingly, they center on terminology while shared frameworks are still being developed. It helps for all team members to expect some tension and to have agreed on how to deal with it. When developing collaboration agreements, it also helps to set expectations for a minimum level of participation. Making this clear from the get-go puts group members on a level playing field. When conflicts arise between group members, it helps to focus on the group as a whole, rather than the individual members. Some phrases to use might be things like “How does our group feel about [blank definition]?” or “Is this something the project can resolve, or is this a larger issue within your disciplines?” These kinds of questions can refocus group participants. These questions are also useful to ask yourself, if you ever find yourself planting your flag on a semantic hill. (Of course, once you have developed your common vocabulary, it is important to explain it in your publications. Remember your confusions and conflicts, for they are what you must explain later and will often be some of your most valuable contributions.)

Off to the races... or off the rails

Interdisciplinary collaboration comes with a series of logistical challenges and considerations. For example, money is ALWAYS an issue. We need money for travel, meetings, and salaries. While COVID might have necessitated comfort with virtual meetings, there isn’t really a replacement for being in the same place at the same time.

Like any relationship, collaborations take time and work to be successful. The biggest factor is that everyone involved meets regularly to stay engaged. The more time you commit, the more connected you are intellectually and the more you feel you need to succeed in generating a product. So frequent meetings, weekly or monthly, are crucial. These will usually occur via Zoom if you are in far-flung places. Dan’s Rules of Life collaboration hinges on a weekly reading club about network analysis of biological data, reading papers from biology, CS, and statistics. This Zoom meeting began with just the core team but grew to include more people as we found it helpful to bring more conversationalists to the table. We reserve one week a month for project-specific discussion.

The weekly meetings should be supplemented by periodic (once or twice a year) in-person meetings. These help develop personal connections and friendships that cement the motivation to help each other. They keep you focused for a few solid days just on the group’s goals and progress, which is often more efficient than the hour per week that is easily missed or forgotten. And there are huge benefits from the creative and more free-wheeling conversations that may happen after the official work day ends. These meetings are especially valuable to students and postdocs; they provide them with more of a network to rely on in the future and people to seek help from in their current work.

Pay people for work they do. Academics are notorious for unpaid labor because it is culturally expected and because it yields later benefits in terms of promotion. We often see scientific publications as a reward in and of themselves, because this is the currency that builds our reputation and career to advance to the next step of the academic ladder. But being able to devote that time is a position of privilege, and whenever feasible it is best to pay people for work done. This can be challenging, though, because the collaboration may have no funding, or barely enough to collect the data. Moreover, expectations for pay vary by field: computer scientists with extensive options in the private sector tend to be better paid than many other scientists (though ecology and evolution students are waking up to the fact that their statistical programming skills are marketable outside academia).

Fields may differ also in expectations for data sharing and archiving. Some fields require public data repository archives to publish, others view these as suspect, a way for ‘data parasites’ to pirate information and scoop authors of hard-won results (note, this is a very very rare thing; far more common is the lab or agency with too much data who would be happy to help someone do more with their collected data, especially if given appropriate credit).

Exiting a bad collaboration

Sometimes collaborations fizzle out. Maybe a grant is declined or a research direction fails to yield results that keep members of the collaboration invigorated and enthusiastic. Other times, collaborations may sour. There is no easy way of extricating oneself from a bad collaboration. Walking away may not be a choice for an early career scientist, but do you stay or do you go? While hindsight is 20/20, having an agreement about data sharing, the scope of work, and the collaboration philosophy may prevent problems arising or provide a mechanism to deal with problems.

So, what if a collaboration really does go deeply wrong and you realize this isn’t working, then what? How do you extricate yourself? If this is simply a matter of your losing interest, then we’d say you should bite the bullet and deliver on the things you promised to your collaborators or find someone to replace yourself who will be faster and more eager. But sometimes you start a collaboration and then discover that someone isn’t contributing their share. Or they are too unpleasant. Or there’s sexual or racial harassment, or bullying. There’s no single answer here, as this depends on a delicate balance of the severity and nature of the problems, the benefits of persisting in the collaboration, and the career risks of pulling out (e.g., funding lost, people in power offended, etc.). But if you find yourself in a troubled collaboration, first talk to someone you trust for advice. If you decide you’d rather walk away from the collaboration, the best thing to do is to (1) do it sooner rather than later, (2) be firm but polite, and (3) offer a solution to the folks left behind. For instance, you might find someone in your field with similar expertise who is willing and able to put up with whatever drove you out, so you don’t leave a gap in the team you exit. This helps reduce any resentment that might ricochet back to you later. Then you need to negotiate whether you have done enough to retain some authorship later, whether you want it, and how to financially extricate yourself.

Who publishes this stuff anyway?

One of the challenges with interdisciplinary collaborations is identifying useful products of any project. For example, even if all participants are academics, the journals in which it is meaningful and useful to publish may differ among group members. The publishing and peer review norms may be at odds - in mathematics, for example, proofs are often published on personal blogs, or in small notes. There is no formal peer review for these products and citing this work in journals can be difficult. Yet the longer timeline of evolution and ecology fields can be stifling for many collaborators who are used to faster turn-around. To a computer scientist used to writing a paper a month with new software, the timeline for field work, RNA extraction, transcriptome sequencing, data filtering, and read calling may seem puzzlingly slow.

Selecting the correct journal should be discussed early within collaborations, and function as a dialogue as the project progresses. Since collaborations take time, professional goals may change and the “best” journal at the end may not be the initial journal selected. Of course, one hope is that by doing interdisciplinary work, one generates more innovative scientific results that are publishable in interdisciplinary journals that transcend any one field, which can be good for everyone (if successful).

If collaborators are outside of the academy, mutually beneficial collaborations may require creating more than one product. For many agency collaborators, products like publicly available data sets or data papers can be a good alternative to traditional academic papers. Many agencies have specific rules on journals and data repositories, so following these procedures for data papers and data sets gives agency collaborators more control over their output. In a recent project, KelpRes, in addition to traditional peer-reviewed papers, the team also made an infographic documenting subtidal kelp forest habitat in Ireland. The types of products at one’s disposal are nearly limitless, and collaborations that go beyond the academy may open your mind to other ways of communicating science.

__________________________________________________________________________________

Scope of work template

This serves as a document to resolve any future disagreements. The more detail you fill in concerning methods and data collected, the better we can avoid redundant or competing work.

Newcomers to the project should fill in a SoW for their proposed contribution, which should be reconciled with prior SoWs.

If you are doing more than one distinct project, each requiring different samples, file a separate SoW for each question/topic.

Replace the placeholder text below (red in the original template, shown here in capitals) with your own information.

Some collaborative ethics comments:

Biting off more than you can chew is uncool; other members of the group may be counting on you to complete your SoW so that they have the data they need to interpret their own work. So please propose to do only that which you honestly think you can complete.

That said, it is okay to distinguish between what you definitely will do (a commitment to other team members), versus what you aspire to do. The latter may depend on funding and time availability. But, the aspirational part is just hypothetical and so your prior claim to that work is less strongly established than the core part of your SoW. If it becomes clear you are unable to follow through (e.g., a grant isn’t funded), it behooves you to tell collaborators in case someone else can do it or can help you with funding.

Scope of Work

YOUR_NAME

DATE

DESCRIPTIVE TITLE

1. Question / Hypothesis:

2. Brief explanation of context: WHAT IS NOVEL, RELATIVE TO THE LITERATURE THAT EXISTS? WHY IS THIS INTERESTING?

3. Specific Aim # 1:

i) Rationale/Goals BRIEF SUMMARY; Distinguish between what you are committing to do, versus what you aspire to do.

ii) Methods

Fish Sampling summary

| # fish per sample | Which populations? | Sample frequency (#/yr) | Sample duration (# years) | Lethal sampling? | Preservation method | Traits measured |
| | | | | | | |
| | | | | | | |
| | | | | | | |

iii) Analysis/Interpretation BRIEF

iv) Publication plan

What/when

Authorship:

Additional data required from others:

Must wait on other papers’ prior publication?

Must be published before other papers?

v) Ballpark budget. Note: enumerating these costs can help identify redundancies and efficiencies, and also forces each of us to be more realistic about what we can (afford to) do.

Salaries to pay personnel

| Position | # months/yr | # years | Cost | Duties |
| | | | | |
| | | | | |
| | | | | |

Total salary costs:

Travel

| # of trips per year | # of years | # people per trip | Duration | Cost per person |
| | | | | |
| | | | | |
| | | | | |

Total travel costs:

Field supplies

| Item | Quantity | Cost each |
| | | |
| | | |
| | | |

Total field costs:

Equipment needed

| Item | Quantity | Cost each |
| | | |
| | | |
| | | |

Total equipment cost:

Laboratory costs

| Activity | # samples per year | # samples total | Cost per sample | Cost per year | Cost total |
| | | | | | |
| | | | | | |
| | | | | | |

Total lab cost:

vi) Existing funds:

vii) Plan to acquire funds
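For anyone who prefers to tally the ballpark budget in a script rather than by hand, here is a minimal sketch of the arithmetic behind the Laboratory costs table. It is only an illustration: the class name, the function name, the activities, and every number are made-up placeholders, and it assumes that the total number of samples is simply samples per year times the number of years.

```python
from dataclasses import dataclass


@dataclass
class LabActivity:
    """One row of the 'Laboratory costs' table (hypothetical example values)."""
    activity: str
    samples_per_year: int
    years: int              # assumption: sampling effort is the same every year
    cost_per_sample: float

    @property
    def samples_total(self) -> int:
        # '# samples total' column
        return self.samples_per_year * self.years

    @property
    def cost_per_year(self) -> float:
        # 'Cost per year' column
        return self.samples_per_year * self.cost_per_sample

    @property
    def cost_total(self) -> float:
        # 'Cost total' column
        return self.cost_per_year * self.years


def total_lab_cost(rows):
    """Sum the 'Cost total' column to fill in the 'Total lab cost' line."""
    return sum(row.cost_total for row in rows)


# Placeholder rows; replace with your own activities and prices.
rows = [
    LabActivity("DNA extraction", samples_per_year=100, years=2, cost_per_sample=5.0),
    LabActivity("Sequencing", samples_per_year=50, years=2, cost_per_sample=40.0),
]

for row in rows:
    print(f"{row.activity}: {row.samples_total} samples, "
          f"{row.cost_per_year:.2f} per year, {row.cost_total:.2f} total")
print(f"Total lab cost: {total_lab_cost(rows):.2f}")
```

The same pattern (quantity times unit cost, summed over rows) would apply to the salary, travel, field supply, and equipment tables. Writing it down this way makes it easy for collaborators to spot where two SoWs are budgeting for the same samples.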

__________________________________________________________________________________

Publication and data sharing agreement (example)

1. Preface

We are on this project together because we know and trust and like each other. That mutual respect is the core of the collaboration and is essential to the project’s success. In light of this mutual trust, a set of written agreements may feel awkward and overly formal. It may appear to imply a lack of trust. We think of these agreements more as a form of open and explicit communication, where we lay out our respective aims, aspirations, rights and responsibilities clearly to each other. In so doing we help avoid miscommunications and misunderstandings that can be the downfall of large collaborative projects.

2. Organization

2.1 Fundamental commitment of participating researchers: Our project is an inclusive network of researchers who agree to these basic ground rules: (1) to collect data accurately and in a timely fashion, (2) to ensure the data are collected as laid out in the Project’s protocols and Scopes of Work, (3) to openly share data associated with the project, and (4) to publish high-quality collaborative papers.

2.2 Administration: The project is currently administered by a committee chaired by ____ with _____ (___ team representative), ____ (_____ team representatives), and _ (____ team representative), with input from all PIs with approved Scopes of Work.

3. Code of Conduct

3.1 Data collection: All participants agree to collect data accurately, following WGEE protocols to ensure that the resulting data can be merged among labs. If you wish to collect additional data, that is fine; please add it to your Scope of Work (see below) along with a clear protocol. If you wish to suggest changes or improvements, please feel free to contact the project coordinators. Data should be collected in a timely manner so that other researchers relying on your data products can proceed with their own work.

3.2 Data storage: Data collected for the project will be archived as hard copy paper or as original files (e.g., photographs) to guard against file corruption. Data files will be stored in backed-up and version-controlled systems such as GitHub, and shared with group members. Data storage will follow best practices (e.g., https://library.stanford.edu/blogs/stanford-libraries-blog/2020/04/ten-tips-better-data-while-you-shelter-place).

3.3 Data use: Data will be available to registered project members once reviewed and uploaded to the archive by the project managers. Data will be made publicly available upon publication. Requests for access to data prior to their public release will be reviewed by the coordinators and the relevant data collectors to ensure the proposed use does not conflict with ongoing analyses, publications, or proposals as laid out in Scopes of Work (below).

3.4 Data citation: Any participant is free to use WGEE data for publications, courses, presentations, etc. if they follow the guidelines for co-authorship listed below, and if their publication does not undermine another participant’s publication goals as laid out in the Scopes of Work.

4. Scopes of Work

4.1 The goal of the Scope of Work is to clearly define each person’s scientific and logistical role in the project. This is a document where you indicate what samples you will collect, what data you intend to generate, what paper(s) you intend to publish, and how these goals will intersect with those of other people. It is wise to include specific biological questions, and specific publication plans where feasible. This description, once reviewed by the rest of the group, is a time-stamped reflection of your plans, which represents both a set of rights (nobody else should publish the papers you plan on doing) and responsibilities (you should, to the best of your ability, follow through on your SoW).

It is important that you keep this reasonably achievable; if any one person claims more than they are able to deliver then this creates a gap that undercuts the overall team. If you fail to deliver on a dataset you planned to generate, after a reasonable time (TBD) it is possible for the group as a whole to consider assigning this to another participant. This will only be done in exceptional circumstances, when the group determines that the data are necessary for the collective goal, and you have not made a good faith effort to make sufficient and timely progress towards generating the data.

4.2 You may revise the SoW as project goals evolve, but please do so using track changes / comments at first, so relevant team members can review and comment on your updates to the SoW. When you make changes, please notify the overall group and save the original to an archive folder. Team members will be asked to update their scopes of work at least annually, or as changes arise.

4.3 New team members will be expected to submit SoWs of their own, which need to be approved by the group, with a particular eye to ensure we are not generating excessive overlap with existing SoW plans by someone else on the team. The exception is if someone with an existing aim is willing to remove that from their own SoW.

4.4 The SoWs are kept here:

These include:

PI Topic with link to SoW

5. Data sharing and publication standards

________________

Data Sharing and Publication Agreement: Many of our projects are going to yield papers that use multiple lines of data generated by different labs. The goal of a Data Sharing & Publication Agreement is to formally define expectations for who can publish what data, when, and who has a right to authorship. These are sometimes used by Editors to adjudicate publication disagreements, so having one worked out and digitally signed in advance, and archived, can prove valuable later, though we all hope this proves unnecessary. Participation in WGEE and use of WGEE data implies a willingness to abide by the following norms.

5.1 Co-authorship of articles using unpublished data: If you contribute data to the WGEE Project, you will automatically be included as a co-author on any papers using your data until such time as the data are made publicly available. We encourage true collaboration: authors should actively seek engagement from those who collected the data they are using from the start of the project, and data collectors should provide feedback and insights. Final authorship assignment is the responsibility of the lead author. Of course, you may opt out of co-authorship at any time by informing the lead author.

Authorship order: The first author will typically be the researcher who has done the most to analyze the data and write the text of the manuscript. The last authors will typically be PIs who have been involved in conceiving and overseeing the overall project. Middle authors will be people who contributed minority shares of time to data collection, analysis, or writing. Within these three categories, the order will be determined by discussion in advance of submission. Disagreements will be adjudicated by the group of PIs. Where people have equal claim to authorship roles, the order may be determined by (1) randomization, or (2) a staring contest, or (3) other fair procedure agreed upon in advance by all parties.

5.2 Co-authorship of articles using publicly available data: We strongly encourage authors who re-use archived data sets to include as fully engaged collaborators the researchers who originally collected them. We believe this could greatly enhance the quality and impact of the resulting research because it draws on the insights of those immersed in a particular field.

5.3. Informing participants of intent to write an article: If you wish to write a paper using Gatekeeper data, a working title and abstract should be submitted to the Project Coordinators prior to drafting the manuscript. This will be shared with other project participants for review. Individuals interested in becoming contributing authors of the proposed paper must contact the lead author directly. As stated above, we strongly encourage active engagement of project participants.

5.5 The Scopes of Work define expected publications, and the PI responsible for a given publication topic has the right of first refusal to write the relevant paper. They may designate a student or other lab member to take on the data collection, analysis, and authorship tasks. They may waive that right so that a member of another lab can take on that topic, or a closely related one. If a manuscript is proposed and subsequently abandoned for > 6 months, interested Gatekeeper participants are encouraged to discuss taking over the development of the manuscript with the lead author.

5.6 Each data file will have a Readme.txt file linked to it, containing the relevant metadata and the names of those who collected and curated the data (“data owners”). The data owners have the right to be included as co-authors on any paper(s) using that data file. This right may be waived after the data file becomes public (e.g., after first posting on a public repository associated with a publication), but it will remain preferable to include the data owners as co-authors when the data they are responsible for represent a significant contribution to the results of a given manuscript.

5.7 Co-authors are expected to give constructive and thorough comments on analyses, interpretation, and writing. This is especially pertinent after a dataset is first published (e.g., for later papers, generating already-public data is a weaker claim to authorship that should be bolstered by active intellectual participation in the writing).

5.8 Co-authors must be given reasonable time to read and comment on a paper before it is submitted for review, and it should not be submitted to a journal without their consent. If a co-author fails to respond to requests for comments within a reasonable time frame (to be agreed upon during manuscript preparation), the remaining co-authors may, depending on the context, remove the non-contributing co-author from the authorship list. Alternatively, if (for instance) they contributed extensively to data collection, the manuscript’s author contribution statement may indicate that the person in question contributed only to data collection.

5.9 Authors on papers should be able to explain the main findings and how they were arrived at, and vouch for the results insofar as they contributed to them via (a) conceiving of the research question(s), (b) sample acquisition, (c) data generation, (d) data analysis and interpretation, (e) writing. We will use Author Contribution Statements on articles to clearly identify participants’ roles without overstating them: when an author is listed as contributing to a particular task in generating a paper, they are vouching for and responsible for the accuracy of that element of the paper.

5.10 Data will not be shared with outside parties without the full group’s consent, and especially not without the consent of the data owner(s).

6. Disputes: Anyone who is not listed as a co-author but believes they have a right to co-authorship may appeal to the group of PIs, or to the Editor of the journal in question. Participation in the project implies consent to abide by the above rules and to add co-authors as expected, but also to contribute to manuscripts to earn and retain authorship rights.

7. The entire collaborative team operates from a position of trust that (a) team members are respectful of each other’s expertise, contributions, and career goals, and (b) data contributed by team members are accurate and correct.

SIGNATURE PAGE:

By typing your name below, you indicate that you have read the above document and relevant Scopes of Work, and agree to abide by the terms of this document.

Name: Date read

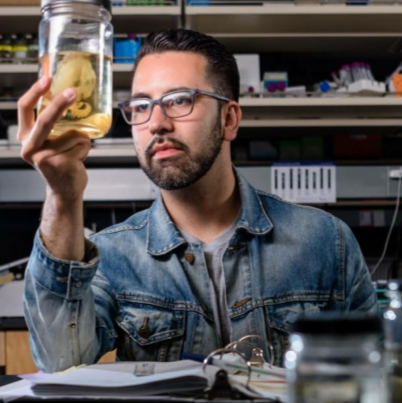

Southeast LA and San Bernadino born and raised, Bryan, describes how his low-income background drove him to design novel mathematical approximations to tackle complex science problems (jumping in frogs), as an alternative to using expensive equipment that may have been financially inaccessible. He also explains how his Latinx background prepared him to spot genuine mentors and allies, which have now blossomed into solid friendship. Finally, Bryan touches on experiencing culture shock as a Latino in academia and how EEB departments can support their fellow Latinx academics.
